AI Governance Guide: Ensuring Compliance, Accountability, and Transparency in LLM Integrations

As organizations embrace large language models (LLMs) and other AI systems, compliance and accountability have become top of mind. Businesses in finance, healthcare, government, and beyond are integrating AI into critical workflows – from customer support chatbots to automated decision tools – raising the stakes for oversight. Leaders are asking: How do we ensure our AI’s decisions are transparent and traceable? Who is accountable if something goes wrong?

Those questions point to the need for robust AI governance practices centered on logging, transparency, and risk management. Without logs and evidence, “responsible AI” is just a slogan. In this guide, we outline how organizations can approach AI governance through comprehensive logging and accountability, which types of businesses and roles should lead the charge, and what solution requirements are typical. We’ll also walk through realistic case studies and a FAQ to help you get started.

Why Compliance and Accountability in AI Matter

AI governance isn’t just a buzzword – it’s a strategic imperative for any organization using AI at scale. A few reasons:

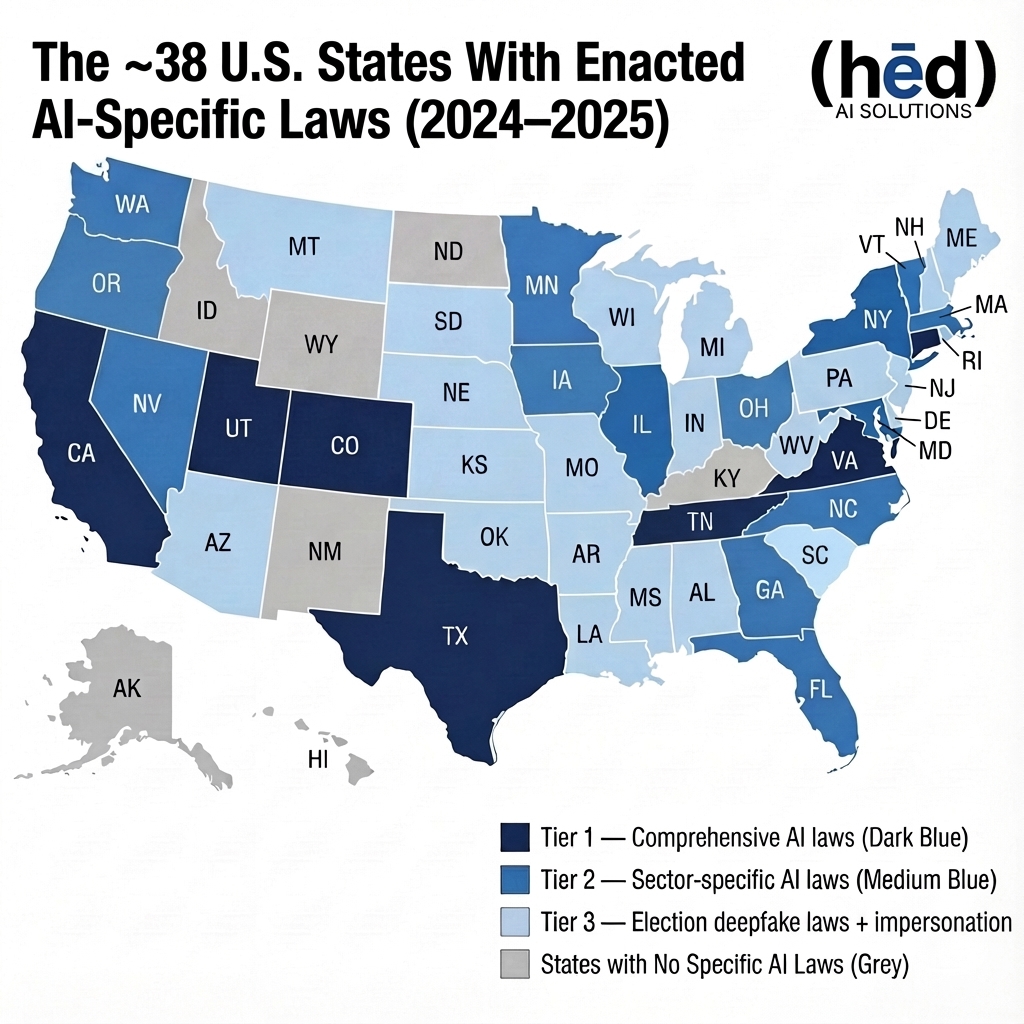

- Regulatory requirements. Regulators are enacting rules to ensure AI is used responsibly. The EU AI Act, GDPR, HIPAA, financial regulations, and sector guidelines all expect strong controls over automated decisions and the data they touch. Using AI does not remove your obligations – it usually expands them.

- Ethical responsibility and trust. AI can amplify bias, hallucinate, or make opaque decisions. Without governance, you risk deploying systems that discriminate or violate ethical norms. Demonstrating accountability – being able to explain why an AI made a recommendation – is key to maintaining customer and public trust.

- Risk management and quality control. Accountability and logging provide a safety net. They enable traceability of AI actions so you can quickly identify what happened and why if something goes wrong. Logs support rapid debugging, issue resolution, and continuous quality checks.

- Transparency for stakeholders. Customers, boards, and regulators increasingly demand transparency into AI-driven decisions. Governance practices like logging and reporting give them meaningful visibility instead of hand-waving.

- Auditability and defense. In regulated sectors, you must be audit-ready. Structured evidence of AI operations – inputs, outputs, timestamps, approvals, outcomes – provides a defensible record if auditors or regulators come knocking.

As AI becomes woven into critical business processes, the need for transparency, tracking, and accountability can’t be overstated. Governance is what makes that visibility and control possible.

Core Principles of Effective AI Governance

AI governance is the set of policies, procedures, and controls that ensure AI systems are used responsibly. Key principles:

- Transparency. Stakeholders should understand how AI is being used and how it works at a high level. Maintain an inventory of AI systems, document data sources and intended uses, and avoid “black box” deployments you can’t explain.

- Accountability. Assign clear ownership for AI systems and outcomes. There must be named people responsible for training, deploying, monitoring, and intervening when needed. Human-in-the-loop mechanisms are a core part of accountability.

- Auditability (traceability). Any AI interaction or decision should be reconstructable after the fact. That requires diligent logging of inputs, outputs, model versions, parameters, and any tools or data sources invoked along the way.

- Risk management. Treat AI like any other critical business process: identify risks (bias, security, safety, legal), assess impact, put controls in place, and monitor continually.

- Privacy and security. Ensure AI systems follow data protection principles. Limit and mask personal data where possible, secure logs, and extend your existing security policies – access control, incident response, encryption – to AI and its data flows.

- Continuous improvement and education. Governance is not “set and forget.” Train teams on AI literacy and update policies, controls, and tooling as technology and regulations evolve.

Building an AI Governance Framework

Here’s a practical way to operationalize those principles.

1. Define Policies and Standards

Start by setting clear rules of the road for AI use:

- Where AI can and can’t be used.

- What data is off-limits or requires special handling.

- Approved vs. prohibited use cases (e.g., “no direct legal or investment advice without human review”).

- Model selection standards (e.g., pre-vetted models only, minimum performance/fairness metrics).

Adapt these to your regulatory context: HIPAA for healthcare, financial conduct rules for banking and insurance, public records and accountability laws for government, etc.
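One way to make rules like these enforceable is to express them as data that code can check before a request reaches a model. The sketch below is illustrative only: the `AIUsePolicy` fields, the `evaluate_request` function, and the example model and use-case names are all assumptions, not part of any standard or product.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Tuple


@dataclass(frozen=True)
class AIUsePolicy:
    """Hypothetical policy record; every field name here is illustrative."""
    approved_models: FrozenSet[str]
    prohibited_use_cases: FrozenSet[str]
    human_review_required: FrozenSet[str]
    restricted_data_classes: FrozenSet[str]


def evaluate_request(
    policy: AIUsePolicy, model: str, use_case: str, data_classes: Iterable[str]
) -> Tuple[str, List[str]]:
    """Return (decision, reasons); decision is 'deny', 'review', or 'allow'."""
    reasons = []
    if model not in policy.approved_models:
        reasons.append(f"model '{model}' is not pre-vetted")
    if use_case in policy.prohibited_use_cases:
        reasons.append(f"use case '{use_case}' is prohibited")
    blocked = set(data_classes) & policy.restricted_data_classes
    if blocked:
        reasons.append(f"restricted data classes: {sorted(blocked)}")
    if reasons:
        return "deny", reasons
    if use_case in policy.human_review_required:
        return "review", ["human review required before release"]
    return "allow", []


# Example values are placeholders, not recommendations.
EXAMPLE_POLICY = AIUsePolicy(
    approved_models=frozenset({"vetted-model-v1"}),
    prohibited_use_cases=frozenset({"automated_hiring_decision"}),
    human_review_required=frozenset({"investment_advice", "legal_advice"}),
    restricted_data_classes=frozenset({"phi"}),
)
```

Keeping the policy in one reviewable structure means legal and compliance can sign off on the same artifact that the runtime enforces.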

2. Assign Roles and Responsibilities

Clarify who owns what in the AI lifecycle. Typical roles:

- AI product / business owner: accountable for outcomes of a given AI application.

- Data science / ML engineering: responsible for model design, training, and technical performance.

- AI compliance / risk officer: ensures deployments meet regulatory, legal, and ethical standards.

- Security / privacy officer: extends data protection and security controls to AI systems and logs.

- Human reviewers / subject-matter experts: provide human-in-the-loop oversight where required.

Use a RACI matrix (Responsible, Accountable, Consulted, Informed) to avoid gaps and overlap.
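A RACI matrix is easy to check mechanically once it is written down as data. The sketch below assumes the common convention that each activity has exactly one Accountable party and at least one Responsible party; the activity and role names are made up for illustration.

```python
from typing import Dict, List

# Hypothetical matrix: activity -> {role: RACI letter}.
EXAMPLE_RACI: Dict[str, Dict[str, str]] = {
    "model_training": {"ml_engineering": "R", "product_owner": "A", "risk_officer": "C"},
    "deployment": {"ml_engineering": "R", "product_owner": "A", "security_officer": "C"},
    "incident_response": {"security_officer": "R", "product_owner": "A", "risk_officer": "I"},
}


def raci_gaps(matrix: Dict[str, Dict[str, str]]) -> List[str]:
    """List activities that break the one-Accountable / some-Responsible rule."""
    problems = []
    for activity, assignments in matrix.items():
        letters = list(assignments.values())
        accountable = letters.count("A")
        if accountable != 1:
            problems.append(
                f"{activity}: expected exactly one Accountable, found {accountable}"
            )
        if "R" not in letters:
            problems.append(f"{activity}: no Responsible party assigned")
    return problems
```

An empty result from `raci_gaps(EXAMPLE_RACI)` means every activity has a single accountable owner and someone doing the work.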

3. Implement Logging and Monitoring Infrastructure

Logging is the backbone of accountability. For every LLM interaction, log at least:

- Timestamp.

- Requesting user or system identity.

- Input prompt or query (sanitized, if needed).

- Model used (name and version) and key parameters.

- Output text or decision, including any warnings or blocked content.

- Downstream actions (e.g., tools called, records updated).

- Human review or override, if applicable.

Store logs securely in an append-only or tamper-evident form, with strong access controls. Make them searchable so auditors, security, and operations can actually use them.
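The fields listed above, together with the tamper-evident storage requirement, can be sketched as a hash-chained log in which each record stores the hash of the previous one. This is a minimal in-memory illustration, not a production design: the function names and field names are assumptions, and a real system would persist records to durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the record body."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append_record(log, *, user, prompt, model, model_version, params,
                  output, actions=(), human_review=None):
    """Append one LLM interaction to the log, chained to the previous record."""
    prev_hash = log[-1]["record_hash"] if log else GENESIS_HASH
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "prompt": prompt,              # sanitize before logging if needed
        "model": model,
        "model_version": model_version,
        "params": params,              # must be JSON-serializable
        "output": output,
        "actions": list(actions),      # downstream tools called, records updated
        "human_review": human_review,  # reviewer decision or override, if any
        "prev_hash": prev_hash,
    }
    record = dict(body, record_hash=_digest(body))
    log.append(record)
    return record


def verify_chain(log) -> bool:
    """Return True only if no record was altered, reordered, or removed mid-chain."""
    prev_hash = GENESIS_HASH
    for record in log:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != record["record_hash"]:
            return False
        prev_hash = record["record_hash"]
    return True
```

A chain like this detects edits to earlier records, but it cannot detect truncation of the newest records or a full rewrite by someone who controls the whole store. Periodically anchoring the latest hash in a separate system is one common way to narrow that gap.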

4. Embed Controls and Guardrails

Don’t just log; actively enforce policy at runtime. Common guardrails:

- Content filters (for sensitive data, toxicity, regulatory violations).

- Policy engines that block disallowed actions (e.g., generating certain categories of advice).

- Role-based access controls so only authorized staff or systems can invoke risky capabilities.

- Automatic redaction or masking of PII/PHI before prompts reach external models.

- Approval workflows where certain outputs must be reviewed before publication.

Many teams route all AI traffic through an “AI gateway” or middleware layer that enforces these controls consistently across all models and vendors.
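A gateway of this kind can be sketched as a single function that applies access control, redaction, and an output policy check around the model call. Everything here is a simplified assumption: the regular expressions catch only obvious email and US SSN formats, the blocked phrase is a stand-in for rules your legal team would define, and `call_model` is a placeholder for whatever client your vendor provides.

```python
import re
from typing import Callable, FrozenSet

# Deliberately simple patterns; real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# Hypothetical disallowed-claim rule.
BLOCKED_OUTPUT_RE = re.compile(r"\bguaranteed returns?\b", re.IGNORECASE)


def redact(text: str) -> str:
    """Mask emails and SSN-shaped numbers before text leaves the gateway."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return SSN_RE.sub("[SSN]", text)


def gateway_call(
    prompt: str,
    role: str,
    call_model: Callable[[str], str],
    allowed_roles: FrozenSet[str] = frozenset({"support_agent"}),
) -> dict:
    """Enforce role check, redaction, and output policy around one model call."""
    if role not in allowed_roles:
        return {"status": "denied", "output": None, "prompt_sent": None}
    safe_prompt = redact(prompt)
    output = call_model(safe_prompt)
    if BLOCKED_OUTPUT_RE.search(output):
        # Withhold the text; a real gateway would also log and alert here.
        return {"status": "blocked", "output": None, "prompt_sent": safe_prompt}
    return {"status": "ok", "output": output, "prompt_sent": safe_prompt}
```

Because the model client is passed in as a function, the same checks apply no matter which model or vendor sits behind the gateway.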

5. Select the Right Tools and Platforms

Look for platforms or patterns that support:

- End-to-end audit trails and reporting.

- Easy integration with your LLMs (OpenAI, Anthropic, open source, etc.).

- Built-in privacy features (PII detection, masking, encryption).

- Monitoring and alerts on usage, anomalies, and policy violations.

- Bias/fairness evaluation and model versioning.

- Deployment options that match your risk profile (cloud, VPC, on-prem).

6. Pilot First, Then Scale

Pick one meaningful but contained use case – e.g., a customer-support assistant or an internal analytics helper – and implement full governance around it. Use this pilot to stress-test policies, logs, and workflows. Once it’s working, roll the pattern out across other AI applications.

7. Continuous Audit and Improvement

Schedule regular reviews of AI logs, incidents, and metrics. Reassess risks when models, data, or regulations change. Update policies, prompts, and guardrails as you learn. Governance is a living system.
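A recurring review can start from a small script that summarizes the logs and draws a random sample for human inspection. The sketch below assumes each log record is a dict with hypothetical `id`, `status`, and optional `human_override` fields; adapt the names to whatever your logging layer actually stores.

```python
import random
from collections import Counter
from typing import Dict, List


def review_summary(records: List[Dict], sample_size: int, seed: int = 0) -> Dict:
    """Compute simple rates and pick a reproducible random sample to review."""
    total = len(records)
    statuses = Counter(r["status"] for r in records)
    overrides = sum(1 for r in records if r.get("human_override"))
    rng = random.Random(seed)  # fixed seed so the sample can be re-derived in an audit
    sample = rng.sample(records, min(sample_size, total))
    return {
        "total": total,
        "blocked_rate": statuses["blocked"] / total if total else 0.0,
        "override_rate": overrides / total if total else 0.0,
        "sample_ids": [r["id"] for r in sample],
    }
```

Tracking the blocked and override rates over time gives reviewers a signal for when a prompt, guardrail, or model change deserves a closer look.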

Who Should Prioritize AI Governance?

Any organization using AI for meaningful decisions should adopt governance, but the need is especially urgent in certain sectors:

- Financial services. Banks, insurers, and fintechs rely on strict record-keeping and model risk management. Model decisions around credit, trading, fraud, and advice must be logged and explainable.

- Healthcare and life sciences. AI touches PHI, diagnostics, and treatment paths. Privacy, safety, and human-in-the-loop review are non-negotiable.

- Government and public sector. Public agencies face high transparency and fairness expectations, plus public records obligations.

- Manufacturing and critical infrastructure. AI that influences physical processes or safety-critical operations must be tightly governed.

- Retail, e-commerce, and marketing-heavy businesses. AI-driven targeting, pricing, and messaging can create bias or regulatory risk if not overseen.

- Tech and AI vendors. If you build AI products, strong governance is both a necessity and a selling point for enterprise customers.

Key roles that should be at the table include CIO/CTO, CISO, Chief Data Officer, Chief Compliance/Risk Officer, Legal, and business owners of major AI use cases.

Case Studies: AI Governance in Practice

Case Study 1: FinBank’s Conversational AI Compliance

A global bank deploys an AI assistant for customer support. All conversations pass through an internal AI gateway that logs prompts, responses, and referenced knowledge articles, and flags any conversation touching regulated topics (e.g., investment advice, suspicious activity). Compliance teams review samples weekly and use the logs to fine-tune prompts and training data. During a regulator audit, the bank produces six months of structured AI interaction logs showing guardrails in action and no leakage of sensitive data. Result: faster service for customers, and a smooth audit thanks to traceability.

Case Study 2: HealthCo’s Diagnostic Assistant

A hospital network uses an LLM-based assistant to summarize patient notes and suggest possible diagnoses. The AI runs on-premises; prompts are de-identified before processing. Every interaction is logged with a case ID, AI suggestions, and the physician’s final decision. An ethics and quality committee reviews monthly samples to check for bias and clinical soundness. When a patient later questions a decision, the hospital can reconstruct the exact interaction and demonstrate that a qualified physician made the final call with AI as a tool – not a replacement. Governance protects both patients and clinicians.

Case Study 3: RetailCo’s Marketing Content Engine

An e-commerce company uses an LLM to draft product descriptions and campaigns. The system logs the prompt, model version, generated text, and who requested it. Nothing goes live until a marketing manager approves it in a review queue. Legal defines disallowed claim types (e.g., health promises, guaranteed outcomes); the AI gateway blocks or redacts any output that matches those patterns. Over time, the company scales content production dramatically, with zero compliance incidents, because every piece of AI-generated copy is traceable and reviewed.

FAQ: AI Compliance, Logging, and Accountability

Q1: How is AI governance different from traditional IT governance?

A: IT governance focuses on managing technology assets, data, and risks in general. AI governance adds a focus on model behavior, bias, explainability, and human oversight. It cares not only about where data lives, but also about how models use it and what decisions they make with it.

Q2: We’re a small, unregulated company. Do we still need AI governance?

A: Yes, at an appropriate level. Even if regulators aren’t knocking on your door, customers and partners will care about how you use AI. Minimal governance – basic policies, logging, and review of high-impact use cases – protects you from surprises and builds trust. You can scale up formality as your AI footprint grows.

Q3: What exactly should we log from our LLMs?

A: At least: who/what called the model, when, the (sanitized) input, the output, model/version, key parameters, and any downstream actions or human approvals. For sensitive domains, also log which data sources or tools the model accessed. Then secure and retain those logs according to your regulatory and business needs.

Q4: How do we balance logging with privacy?

A: Use privacy-by-design techniques: mask or tokenize identifiers, minimize what you log, encrypt logs in transit and at rest, restrict access, and set clear retention periods. In high-sensitivity contexts, consider de-identification and keeping the re-identification keys in a separate, tightly controlled system.
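Tokenizing identifiers while keeping the re-identification mapping elsewhere can be sketched with a keyed hash (HMAC). This is an illustration under stated assumptions: the `Pseudonymizer` class is hypothetical, the in-memory `_vault` dict stands in for a separate, tightly controlled store, and truncating the token to 16 hex characters is a readability choice that slightly raises collision risk.

```python
import hashlib
import hmac


class Pseudonymizer:
    """Replace identifiers with stable tokens; keep the mapping out of the logs."""

    def __init__(self, key: bytes):
        self._key = key
        # Stand-in for a separate, access-controlled re-identification store.
        self._vault = {}

    def tokenize(self, identifier: str) -> str:
        """Return a deterministic token, so one person maps to one token across logs."""
        mac = hmac.new(self._key, identifier.encode("utf-8"), hashlib.sha256)
        token = "tok_" + mac.hexdigest()[:16]
        self._vault[token] = identifier
        return token

    def reidentify(self, token: str) -> str:
        """Look up the original identifier; restrict this call to authorized staff."""
        return self._vault[token]
```

Logs then contain only values such as `tok_...`, and analysts can still group interactions by person without seeing who that person is. A key for this purpose could be generated with `secrets.token_bytes(32)` and stored in your secrets manager rather than alongside the logs.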

Q5: Won’t all this slow us down?

A: Done well, governance speeds you up in the long run. Instead of pausing every project for emergency legal or security reviews, you embed guardrails into your standard pipeline. Teams can ship with confidence knowing they’re operating within clear boundaries.

Q6: How do we get started if we have almost nothing in place?

A: Start small and pragmatic:

- Educate stakeholders on AI risks and responsibilities.

- Inventory current and planned AI use cases.

- Draft a simple AI use policy and get leadership buy-in.

- Implement logging and human review for one pilot use case.

- Refine based on what you learn, then expand to other systems.

Closing Thoughts

AI can be a powerful accelerator for your business – or a source of hidden risk. The difference is governance. With clear policies, strong logging, meaningful guardrails, and ongoing oversight, you can deploy AI systems that are both effective and trustworthy.

If you want help designing or implementing an AI governance program – from policy drafting to logging architecture and tooling – our team can partner with you to build a framework that fits your industry, risk profile, and growth plans.