ISO 42001: The Baseline for Building Trustworthy AI Integrations

Executives are moving quickly to integrate AI across their organizations, but many are discovering the same reality: the technology is advancing faster than the controls required to manage it responsibly. The result is a widening gap between AI innovation and the frameworks needed to govern its impact on operations, customers, and the business as a whole.

Over the past year, that gap has begun showing up in boardrooms, audits, and—most visibly—in RFPs. Buyers want to know how your organization governs AI. Risk managers want clarity on accountability. Compliance teams want evidence that AI systems aren’t introducing exposure. And CFOs want predictable, defensible systems that won’t surprise them with regulatory or reputational risk.

This is where ISO 42001 enters the picture. Not as a technical standard, but as the baseline for building trustworthy, auditable, and investor-ready AI integrations.

Why ISO 42001 Is Becoming the Expected Minimum

ISO 42001 (formally ISO/IEC 42001:2023) is the first international management system standard built specifically for AI. It sets out how organizations should design, deploy, and oversee AI in a way that is transparent, traceable, and accountable.

What matters most for business leaders is this:

ISO 42001 shifts AI from “black box” innovation to a structured, governed operating model.

Industry experts expect the standard to become table stakes by 2026–2027—not because regulators will demand it first, but because customers, insurers, investors, and partners will. The expectation is already forming: If AI touches your product or process, prove you can manage the risks that come with it.

For decision-makers, ISO 42001 is essentially a risk management framework for the AI era—and the first one that a third party can formally certify.

The Real Issue Is Trust, Not Technology

Every company touts “best practices” for AI. Every CTO can say they have internal guidelines. But in today’s environment, claims don’t carry weight. Evidence does.

A simple comparison illustrates the dynamic:

Scenario 1: “Trust us, we handle AI responsibly.”

This is equivalent to buying a used car from a seller who swears they serviced it properly—but can’t produce a record. You either walk away or discount heavily because you’re taking on the risk.

Scenario 2: “Here is independent proof of how we manage AI.”

Now the seller provides a full history, a third-party inspection, and documented maintenance. The risk profile changes immediately.

ISO 42001 is the business equivalent of that independent inspection. It confirms that your AI practices are not ad hoc or personality-driven but structured, documented, consistent, and backed by evidence.

For leaders, that distinction is critical. Trust is now a competitive asset.

What ISO 42001 Actually Covers

ISO 42001 doesn’t attempt to define what “good AI” looks like. Instead, it answers the governance questions every executive should be asking:

- Who is accountable when AI influences a decision or outcome?

- How does the organization identify and mitigate AI-related risks?

- Can the business explain and trace AI outputs if questioned by a regulator, insurer, investor, or customer?

- Are models being monitored for drift, bias, quality, or unintended impacts?

- How quickly can the organization respond if AI causes a mistake?
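For teams that want to see what the monitoring question above can look like in practice, here is a minimal sketch of one common drift check, the Population Stability Index (PSI). It compares how a single numeric input was distributed when a model was approved with how it is distributed today. ISO 42001 does not prescribe this or any other metric; the function name, the bin count, and the 0.25 alert threshold are assumptions chosen for illustration.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Return the PSI between a baseline sample and a current sample.

    Bins are equal-width and derived from the baseline range (an assumption;
    quantile bins are another common choice).
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width if all values are equal

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def needs_review(psi: float, threshold: float = 0.25) -> bool:
    """Flag a feature for human review; the threshold is a rule of thumb, not a requirement."""
    return psi >= threshold
```

A score of zero means the two samples fall into the bins in identical proportions; larger values mean the input has shifted further from what the model saw at approval. What matters for governance is less the exact metric than that a threshold, an owner, and an escalation path are written down.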

In practice, the standard reinforces three pillars of trustworthy AI:

1. Governance

A clear structure for oversight, accountability, and escalation.

No more “everyone owns AI” and therefore “no one owns AI.”

2. Risk Management

Documented processes for identifying, assessing, and mitigating risks—legal, operational, financial, ethical.

3. Transparency & Traceability

The ability to explain how AI systems work, what data they use, and why they produce certain outputs.
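As an illustration of the traceability pillar, the sketch below records each AI-influenced decision with enough context to answer "which model, which input, who owns it" later. It is a minimal example under stated assumptions: the `DecisionRecord` fields and the `record_decision` helper are hypothetical and are not taken from the standard, which leaves the record format to each organization.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One audit-trail entry for an AI-influenced decision (hypothetical schema)."""
    system_name: str
    model_version: str
    accountable_owner: str
    input_sha256: str      # fingerprint of the input, not the raw data
    output_summary: str
    recorded_at: str       # UTC timestamp in ISO 8601 format


def record_decision(
    system_name: str,
    model_version: str,
    accountable_owner: str,
    model_input: dict,
    output_summary: str,
) -> DecisionRecord:
    # Serialize with sorted keys so the same input always yields the same hash.
    canonical = json.dumps(model_input, sort_keys=True).encode("utf-8")
    return DecisionRecord(
        system_name=system_name,
        model_version=model_version,
        accountable_owner=accountable_owner,
        input_sha256=hashlib.sha256(canonical).hexdigest(),
        output_summary=output_summary,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(record: DecisionRecord) -> str:
    """Render the record as one JSON line suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Storing a hash rather than the raw input is a design choice made here to keep personal data out of the log; an organization that needs to replay decisions would also have to retain the inputs themselves under its own data-retention rules.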

These are the same expectations regulators and insurers are beginning to adopt. ISO 42001 simply organizes them into a unified system.
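The risk management pillar above can be made concrete with a simple AI risk register. The sketch below is one possible shape, not a format the standard mandates: the 1-to-5 likelihood and impact scales, the multiplication used for scoring, and the escalation threshold of 12 are all assumptions, and the `AIRisk` class and `prioritize` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Categories taken from the article's list of risk types.
CATEGORIES = {"legal", "operational", "financial", "ethical"}


@dataclass
class AIRisk:
    title: str
    category: str
    likelihood: int   # 1 (rare) to 5 (almost certain), assumed scale
    impact: int       # 1 (minor) to 5 (severe), assumed scale
    owner: str        # the named person accountable for the risk
    mitigation: str = ""

    def __post_init__(self) -> None:
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")
        for name in ("likelihood", "impact"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self) -> int:
        # Likelihood times impact, a common convention for a 5x5 risk matrix.
        return self.likelihood * self.impact


def prioritize(risks: Iterable[AIRisk], threshold: int = 12) -> List[AIRisk]:
    """Return risks at or above the threshold, highest score first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)
```

Requiring a named owner on every entry is deliberate: it turns "everyone owns AI" into a specific person who answers for each risk.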

A Strategic Advantage for Early Adopters

For CEOs, CFOs, and Boards, the upside is twofold.

1. Stronger Market Position

Enterprise buyers are becoming more selective. Vendors who can demonstrate disciplined AI governance move through procurement faster. Those who can’t are increasingly treated as high-risk.

ISO 42001 offers a decisive answer in RFPs and due-diligence reviews:

“Our AI management system is certified to an international standard.”

That single line shortens conversations that would otherwise drag on for weeks.

2. Preparedness for Regulatory Convergence

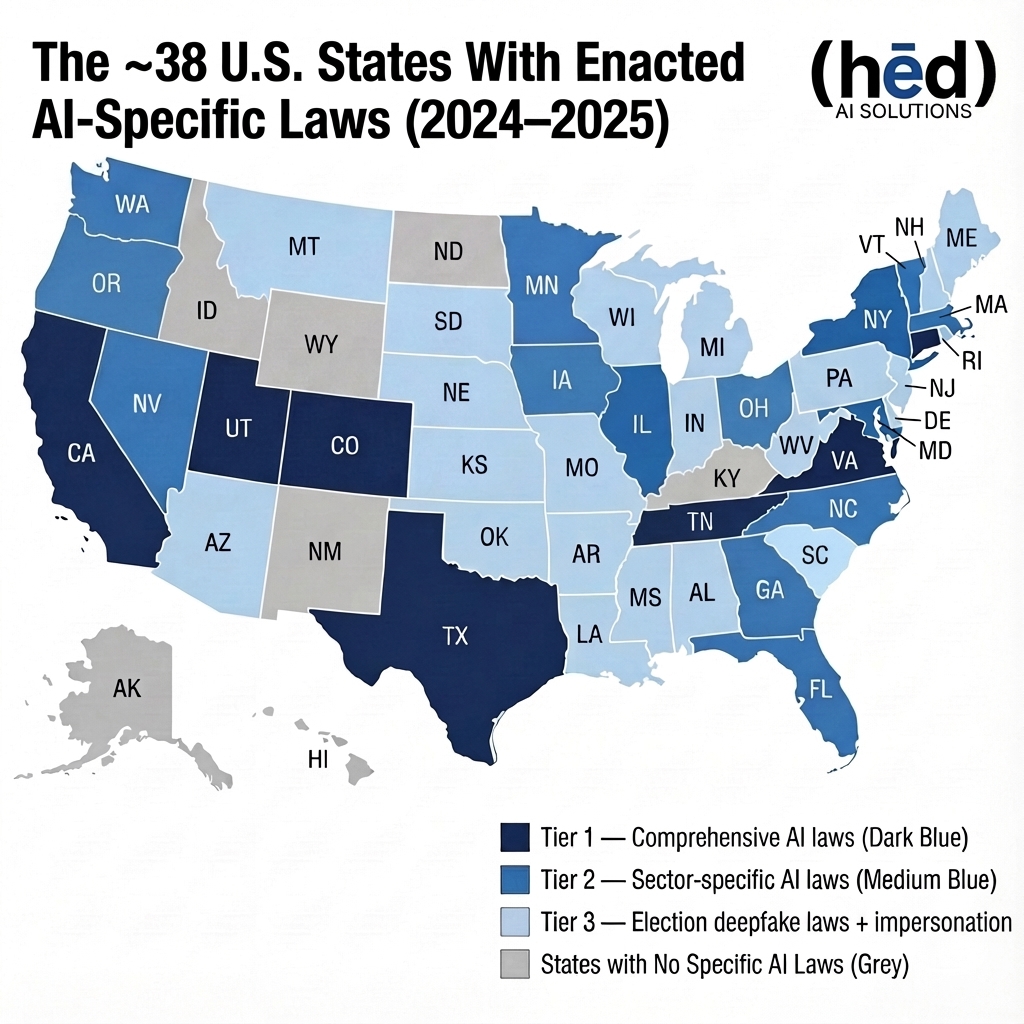

Regulators at the federal and state levels are drafting AI provisions. International frameworks are tightening. While none of this is uniform yet, ISO 42001 aligns with the general direction of global policy.

In other words, adopting ISO 42001 today makes you future-ready, not reactive.

How Organizations Typically Implement ISO 42001

For leadership teams evaluating the path to compliance, the process generally follows four stages:

1. Readiness Assessment

A high-level review of the organization’s current AI landscape:

- Existing controls

- Gaps and exposures

- Areas of immediate risk

- Quick wins

2. Business Case & Roadmap

A concise analysis outlining:

- Estimated effort

- Timeline

- Staff involvement

- Direct and indirect costs

- Expected ROI and risk reduction

This is the document your leadership team uses to decide how to proceed.

3. Implementation & Evidence Gathering

Putting the governance structure into practice:

- Policies

- Procedures

- Monitoring

- Documentation

The goal is operational discipline—not checklists.

4. Independent Audit & Certification

A third party reviews the system and, if conditions are met, issues a formal certificate that can be cited in sales, investor talks, partner agreements, and board reports.

The Cultural Shift Behind ISO 42001

What leaders often overlook is this:

ISO 42001 is not a paperwork exercise. It’s an operational mindset.

The organizations that benefit the most are the ones that treat AI governance as part of their standard risk management approach—no different from financial controls, cybersecurity, or compliance programs.

When that cultural shift takes hold:

- AI deployments become more predictable.

- Risk surfaces earlier.

- Decision-making improves.

- Sales cycles encounter fewer friction points.

- Boards gain confidence that AI is being scaled responsibly.

This is what makes ISO 42001 not only a compliance framework but also a maturity signal.

The Bottom Line for Executives

AI is no longer experimental. It is shaping pricing models, workflows, customer interactions, and strategic decisions across industries.

But with that impact comes liability, scrutiny, and the expectation of oversight.

ISO 42001 is emerging as the baseline for responsible AI integration, not because regulators demanded it, but because the market is moving toward trust-based adoption.

For CEOs, CFOs, COOs, compliance leaders, and owners, the question is no longer:

“Do we need AI governance?”

It’s:

“Are we prepared to prove to customers, partners, and regulators that we’re managing AI responsibly?”

ISO 42001 is how you answer that with confidence.
